API Gateway logs are crucial for the security of modern platforms. Insecure logging can lead to data breaches, GDPR violations, and loss of trust. Here’s how to manage logs securely and efficiently:

- Log only necessary data: Log essential information such as timestamps, HTTP methods, paths, and status codes. Avoid sensitive data like passwords or credit card numbers.

- Integrity and encryption: Use immutable storage, encrypt stored logs with AES-256, and protect logs in transit with TLS.

- Real-time monitoring: Automate alerts for suspicious activities such as repeated failed logins or unusual traffic.

- Observe compliance: GDPR, PCI DSS, and SOX require strict measures for data minimization, deletion, and secure storage.

Tip: Use JSON for structured logs to facilitate analysis and comply with regulations. Tools like ELK or Datadog help manage large volumes of logs. Secure logging not only protects data but also optimizes operational security.

Basic Rules for Secure API Gateway Logging

Secure logging in an API gateway is based on three central principles. These form the foundation for a stable security architecture and help avoid costly compliance violations. Here’s how to effectively implement these principles.

Log only the essentials

Log only the data that is truly necessary. Too many logs can impair system performance and incur unnecessary storage costs. Focus on essential metadata such as:

- Timestamps

- HTTP methods

- Paths

- Client IP addresses

- Status codes

- Response times

This information is sufficient to quickly identify issues and trace security incidents without exposing sensitive data.

Avoid storing complete payloads or critical information such as passwords or personal data. Sensitive fields like Authorization headers or credit card numbers should either be masked or completely excluded from the logs. An example of a secure, structured log entry in JSON format might look like this:

{
  "timestamp": "2025-04-12T10:00:00Z",
  "request_id": "abc123",
  "client_ip": "203.0.113.1",
  "method": "GET",
  "path": "/v1/data",
  "status": 200,
  "latency_ms": 45
}
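One way to enforce "log only the essentials" is an allow-list: only named metadata fields are copied into the entry, so headers and payloads cannot leak by accident. The following is a minimal sketch, not a gateway API. The function `build_log_entry`, the constant `ALLOWED_FIELDS`, and the shape of `request_meta` are assumptions made for illustration.

```python
import json
from datetime import datetime, timezone
from typing import Optional

# Hypothetical allow-list: only these metadata fields ever reach the log.
ALLOWED_FIELDS = ("request_id", "client_ip", "method", "path", "status", "latency_ms")


def build_log_entry(request_meta: dict, now: Optional[datetime] = None) -> str:
    """Serialize allow-listed request metadata as one JSON log line.

    `now` is expected to be a timezone-aware datetime; it defaults to the
    current UTC time.
    """
    now = (now or datetime.now(timezone.utc)).astimezone(timezone.utc)
    entry = {"timestamp": now.strftime("%Y-%m-%dT%H:%M:%SZ")}
    for field in ALLOWED_FIELDS:
        if field in request_meta:
            entry[field] = request_meta[field]
    # Anything not on the allow-list (headers, bodies, tokens) is dropped.
    return json.dumps(entry)
```

An allow-list fails safe: a new sensitive field added upstream stays out of the logs until someone deliberately adds it.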

Protect the integrity of the logs

Immutable logs are essential for forensic analysis and compliance. Use technologies like WORM storage (Write Once, Read Many) and cryptographic hashing to prevent or at least reveal tampering. Digital signatures or blockchain-based logging can add further protection.
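Cryptographic hashing can be illustrated with a simple hash chain, where each record stores the SHA-256 digest of its content combined with the previous record's digest. This is a sketch under assumptions: the names `append_entry`, `verify_chain`, and `GENESIS` are hypothetical, and a real deployment would also need to store the latest hash somewhere an attacker cannot rewrite (for example in WORM storage).

```python
import hashlib
import json

GENESIS = "0" * 64  # assumed placeholder "previous hash" for the first record


def _digest(entry: dict, prev_hash: str) -> str:
    # Canonical JSON (sorted keys, fixed separators) so the hash is reproducible.
    payload = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(chain: list, entry: dict) -> None:
    """Append a log entry, linking it to the hash of the previous record."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append(
        {"entry": entry, "prev_hash": prev_hash, "hash": _digest(entry, prev_hash)}
    )


def verify_chain(chain: list) -> bool:
    """Return False if any record was altered, removed, or reordered."""
    prev_hash = GENESIS
    for record in chain:
        if record["prev_hash"] != prev_hash:
            return False
        if record["hash"] != _digest(record["entry"], prev_hash):
            return False
        prev_hash = record["hash"]
    return True
```

Changing any earlier entry invalidates its own hash and breaks the link to every later record, so tampering is detectable as long as the most recent hash is trusted.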

This is particularly relevant for platforms like Gunfinder, where transaction logs serve as proof of business transactions. The integrity of this data is crucial not only for cybersecurity but also for legal matters.

Encryption is mandatory

Protect logs through encryption, both at rest and in transit. Use proven algorithms like AES-256 for stored data and TLS for transmission (SSL is deprecated and should not be used). Rotate encryption keys regularly and restrict access to authorized personnel.

Consistent encryption prevents attackers from accessing unprotected logs and extracting valuable information for further attacks.

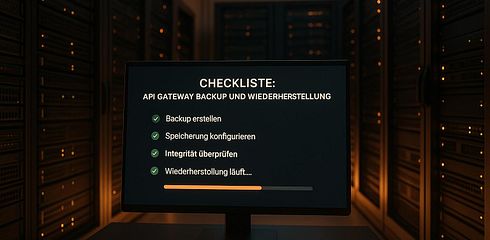

Complete Checklist for Secure API Gateway Logging

Here you will find a practical checklist that helps you effectively implement secure API gateway logging and avoid common mistakes. It covers all relevant aspects to ensure reliable and secure logging.

Set up structured logging

A structured logging format like JSON is ideal as it is compatible with modern log management tools and facilitates automated analysis. A well-designed log entry should contain the following information:

- Timestamp in ISO 8601 format

- Request ID for tracking

- Client IP address

- HTTP method and path

- Status code of the response

- Response time

Additionally, log levels such as INFO, ERROR, and DEBUG control how much detail the logs contain. INFO is sufficient for normal operations, ERROR should be used for failures such as authentication problems, and DEBUG is for in-depth diagnostics during development. Being able to change log levels at runtime lets you get more detailed information quickly during an incident.
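Structured output and runtime level changes can be sketched with Python's standard `logging` module. This is an illustration, not a specific gateway's configuration. The class `JsonFormatter`, the helper `make_logger`, and the logger name `gateway` are assumptions.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        timestamp = datetime.fromtimestamp(record.created, timezone.utc)
        return json.dumps(
            {
                "timestamp": timestamp.isoformat(),  # ISO 8601, UTC
                "level": record.levelname,
                "message": record.getMessage(),
            }
        )


def make_logger(stream, name: str = "gateway") -> logging.Logger:
    """Create a logger that writes JSON lines to `stream` at INFO level."""
    logger = logging.getLogger(name)
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)  # normal operation
    logger.propagate = False
    return logger
```

During an incident, calling `logger.setLevel(logging.DEBUG)` raises the detail level without a restart. Setting it back to `logging.INFO` afterwards keeps volume and storage costs down.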

Track critical API actions

All CRUD operations (Create, Read, Update, Delete) should be logged consistently: record the user ID, action type, affected resource, timestamp, and result (success or failure). A profile update, for example, would be logged with the acting user's ID, the action "update", the profile as the affected resource, and whether the change succeeded.

Different log types serve different purposes and should be configured accordingly:

| Log Type | Purpose | Important Fields/Actions |
|---|---|---|
| Access Logs | Tracking requests/responses | HTTP method, URI, status, client IP, latency |
| Error Logs | Recording errors | Error messages, stack traces, plugin crashes |
| Audit Logs | Logging changes | User access, policy updates, plugin changes |
| Custom Logs | Capturing business-specific events | Custom metadata, plugin-level activity |

Particular attention should be paid to authentication and authorization events, including login attempts, access grants, and denials. These events can indicate security issues early on. Logging critical actions is only half the job: unusual activity also has to be detected in real time.

Monitor suspicious activities

Setting up real-time alerts is crucial for early threat detection. Suspicious activities may include:

- Multiple failed authentication attempts

- Access from unusual or blocked IP addresses

- Sudden spikes in traffic

Automated monitoring systems should look for specific indicators, such as repeated failed logins, access from blocklisted IPs or unfamiliar networks, and unusual request rates. Log management tools can define rules that trigger notifications or even block requests when suspicious behavior is detected.
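A rule for repeated failed logins can be sketched as a sliding-window counter per client IP. The class name `FailedLoginMonitor` and the default thresholds (5 failures in 60 seconds) are illustrative assumptions, not values from any particular tool.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Flag clients with too many failed logins inside a time window."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 60.0):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # client_ip -> failure timestamps

    def record_failure(self, client_ip: str, timestamp: float) -> bool:
        """Record one failed attempt; return True if an alert should fire."""
        window = self._failures[client_ip]
        window.append(timestamp)
        # Drop attempts that fell out of the sliding window.
        while window and timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) >= self.max_failures
```

In practice the return value would feed a notification or blocking rule. The timestamps are passed in explicitly so the logic can be replayed against stored logs as well as live traffic.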

For platforms like Gunfinder, which process sensitive data, continuous monitoring is particularly important. Sensitive values must be masked before they reach the logs: Authorization headers can be replaced with "[REDACTED]", and credit card numbers can be masked except for the last four digits.
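The two masking rules just described could look roughly like this. It is a sketch under assumptions: the header list `SENSITIVE_HEADERS` and the card-number pattern are illustrative, and the pattern is deliberately coarse, so it will also mask other long digit sequences such as 13-digit millisecond timestamps.

```python
import re

# Assumed list of headers to redact; extend it for your own gateway.
SENSITIVE_HEADERS = {"authorization", "cookie", "x-api-key"}

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def mask_headers(headers: dict) -> dict:
    """Replace the values of sensitive headers with a fixed marker."""
    return {
        name: "[REDACTED]" if name.lower() in SENSITIVE_HEADERS else value
        for name, value in headers.items()
    }


def mask_card_numbers(text: str) -> str:
    """Mask card-like digit sequences, keeping only the last four digits."""

    def _mask(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())
        return "*" * (len(digits) - 4) + digits[-4:]

    return CARD_PATTERN.sub(_mask, text)
```

For example, `mask_card_numbers("card 4111 1111 1111 1111 declined")` yields `"card ************1111 declined"`.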

Regular reviews of log configurations are essential to ensure compliance with legal requirements and operational needs. Weak logging also delays detection: the IBM Cost of a Data Breach Report has put the average time to identify and contain a breach at around 280 days.

Compliance and Monitoring Requirements

Compliance with legal regulations is a key factor in gaining and maintaining user trust. At the same time, modern monitoring methods can help efficiently meet these requirements and reduce costs. Below, you will learn how to successfully implement legal requirements.

Meet legal requirements

In Germany and the EU, strict compliance requirements apply to API gateway logs. The most important regulations include:

- GDPR (General Data Protection Regulation): Mandates the protection of personal data and grants data subjects the right to erasure.

- SOX (Sarbanes-Oxley Act): This focuses on precise financial reporting with traceable audit trails.

- PCI DSS (Payment Card Industry Data Security Standard): Regulates the secure processing of payment information.

These regulations directly influence how logs must be managed. For example, credit card numbers must not be stored in logs, and personal data such as IP addresses or user identifiers must be specially protected.

| Compliance Requirement | Recommended Action | Typical Deadline/Rule |
|---|---|---|
| GDPR | Data minimization, deletion obligation | Erasure upon request; keep no longer than necessary (e.g. an internal 2-year limit) |
| PCI DSS | No storage of credit card data in logs | Immediate masking |
| SOX | Traceability, audit trails | 7 years retention |

JSON logs offer an advantage here as they are machine-readable and allow for automated checks. Tools like ELK (Elasticsearch, Logstash, Kibana), Loki, or Datadog help collect, search, and analyze logs centrally. For platforms that process sensitive data, such as Gunfinder, consistent and secure logging is indispensable.

Manage log storage and deletion

A clear strategy for log retention is essential to comply with regulations. A tiered storage concept can help:

- Hot Storage: Logs from the last 0–30 days for active troubleshooting.

- Warm Storage: Indexed and compressed logs for a period of 1–6 months.

- Cold Storage: Long-term archiving for 6+ months to cover compliance requirements and rare investigations.
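The tier boundaries above translate directly into a small classification function. This is a sketch: the function name `storage_tier` is hypothetical, and "6 months" is approximated as 180 days.

```python
from datetime import datetime, timedelta, timezone

HOT_MAX_AGE = timedelta(days=30)
WARM_MAX_AGE = timedelta(days=180)  # assumption: "6 months" taken as 180 days


def storage_tier(log_time: datetime, now: datetime) -> str:
    """Return the storage tier ("hot", "warm", or "cold") for a log's age."""
    age = now - log_time
    if age <= HOT_MAX_AGE:
        return "hot"
    if age <= WARM_MAX_AGE:
        return "warm"
    return "cold"
```

A scheduled job could run this over log batches and move each batch to the matching storage class.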

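The GDPR requires that personal data can be deleted upon request and is kept no longer than necessary for its purpose. The regulation itself sets no fixed period; a limit such as two years is an internal policy choice that should be documented and justified. Automated deletion prevents human error and ensures deadlines are met.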

Cloud services like AWS CloudTrail can be combined with storage lifecycle policies (for example on the S3 bucket that receives the trail) to archive and delete logs automatically according to predefined rules while meeting legal requirements.

Control storage costs

In addition to compliance, cost control plays an important role. An intelligent storage strategy can help reduce expenses. Compressed logs for long-term storage are cost-effective, while tiered storage systems ensure that frequently needed data remains quickly accessible.

According to a 2024 Postman survey, 66% of developers use API gateway logs for troubleshooting in production environments.

This shows how important it is to find a balance between cost efficiency and availability.

Monitoring tools should continuously monitor storage usage and trigger alerts when thresholds are reached. It is advisable to store only the necessary information. Detailed debug logs are often unnecessary in production and can be dynamically activated when needed.

Centralized solutions like Elasticsearch, Splunk, or Apache SkyWalking simplify the management of large log volumes. They provide unified monitoring and can support compliance through automated archiving.

Common Mistakes and How to Fix Them

Even experienced developers make logging mistakes that can create security risks. Often, these result from unclear guidelines or a lack of understanding of compliance requirements. However, with the right measures, many problems can be avoided.

Logging sensitive data

A common and serious mistake is the accidental storage of sensitive information. This includes passwords, tokens, or credit card numbers that should never appear in logs.

For example, in 2023, a fintech company suffered a massive data loss because sensitive data was stored unencrypted in logs. Attackers gained access to these logs through a misconfigured storage bucket.

The solution? Proactive protective measures. Use data masking at the gateway level before information is even written to the log. Many API gateways offer plugins that can automatically redact sensitive fields.

A particularly risky scenario is logging complete request payloads without any masking. This can lead to sensitive data such as user credentials being captured. Platforms like Gunfinder, which work with sensitive transaction data, must proceed with particular care and consistently filter.

To ensure that no sensitive data is logged, you should regularly review your log configurations and conduct audits. Automated scans can help identify problematic entries early on.
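An automated scan of this kind can be sketched as a pass over existing log lines that flags bearer tokens and digit sequences passing the Luhn checksum used by payment card numbers. The function names and patterns are illustrative assumptions; a production scanner would cover more secret formats.

```python
import re

CANDIDATE_CARD = re.compile(r"\b\d{13,19}\b")
BEARER_TOKEN = re.compile(r"Bearer\s+[A-Za-z0-9._~+/-]+=*", re.IGNORECASE)


def luhn_valid(number: str) -> bool:
    """Check a digit string against the Luhn checksum."""
    total = 0
    for index, char in enumerate(reversed(number)):
        digit = int(char)
        if index % 2 == 1:  # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def scan_log_lines(lines):
    """Return (line_number, finding_type) pairs for suspicious entries."""
    findings = []
    for lineno, line in enumerate(lines, start=1):
        if BEARER_TOKEN.search(line):
            findings.append((lineno, "bearer_token"))
        if any(luhn_valid(m.group()) for m in CANDIDATE_CARD.finditer(line)):
            findings.append((lineno, "card_number"))
    return findings
```

The Luhn check reduces false positives from order IDs and similar numbers, though any such sequence still has roughly a one-in-ten chance of passing by coincidence.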

In addition to filtering sensitive data, continuous monitoring of the logs is crucial.

Insufficient log monitoring

Another common mistake is insufficient or missing log monitoring. Without effective monitoring, security incidents such as unauthorized access or attacks often go undetected. This leads to prolonged exposure and potentially more severe consequences.

Suspicious patterns such as repeated failed login attempts or unusual traffic spikes can easily be overlooked without appropriate monitoring. This significantly increases the risk of data breaches and violations of compliance requirements.

As the Postman survey figure cited earlier suggests, many teams depend on gateway logs to debug production issues, which makes a well-monitored logging infrastructure all the more important. Centralized log management solutions like the ELK Stack, Graylog, or Datadog provide real-time analysis, alerting, and visualization of large log volumes.

Set up automated alerts to be notified of anomalies such as unusual traffic, authentication errors, or suspicious API calls. Structured logs in JSON format also facilitate filtering and analysis.
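An alert on unusual traffic can be as simple as comparing the current interval's request count against a recent baseline. This is a deliberately basic sketch: the function `is_traffic_spike`, the factor of 3, and the minimum of 100 requests are assumptions, and real monitoring tools typically use more robust statistics.

```python
from statistics import mean


def is_traffic_spike(history, current, factor=3.0, min_requests=100):
    """Return True if `current` far exceeds the average of recent intervals.

    `history` holds request counts for previous intervals (e.g. per minute).
    `min_requests` suppresses alerts on tiny absolute volumes.
    """
    if not history:
        return False  # no baseline yet, so nothing to compare against
    baseline = mean(history)
    return current >= min_requests and current > factor * baseline
```

The `min_requests` floor keeps a quiet endpoint that jumps from 2 to 10 requests from paging anyone.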

| Problem | Impact | Solution |
|---|---|---|
| Sensitive data in logs | Data breaches, GDPR violations | Automatic masking, regular audits |
| Missing monitoring | Delayed attack detection, prolonged exposure | Automated alerts, real-time analysis |
| Unstructured logs | Difficult evaluation, missed threats | JSON format, uniform structure |

Integrate your API gateway logs into central monitoring and security operations platforms such as AWS CloudWatch or Google SecOps. Such tools facilitate monitoring and support compliance with regulations.

Regular reviews of the logs for suspicious activities should be an integral part of your security strategy. Document all logging practices to demonstrate compliance with legal standards during audits.

Conclusion: Key Points at a Glance

Secure API gateway logging plays a central role for e-commerce platforms like Gunfinder when it comes to data protection, compliance, and smooth operation. Careful implementation protects both the company and customers from security incidents and legal issues. This foundation serves as the starting point for further security measures.

Central Security Steps

The fundamentals of secure API gateway logging are based on three crucial principles: data minimization, encryption, and continuous monitoring.

Structured logging in JSON format allows for precise analysis and makes it easier to correlate events. Suspicious activities can be detected more quickly, and GDPR requirements are easier to meet. Especially for platforms like Gunfinder, which process sensitive transaction data, it is essential to consistently mask passwords, payment information, and personal data.

End-to-end encryption, both during transmission and at rest, protects against unauthorized access. Together with strict access controls and regular audits, a stable security foundation is created.

Well-structured and secure logs are essential for timely detection of security incidents. Without proper monitoring, attacks could go unnoticed—a risk no company can afford.

Compliant storage with clearly defined retention periods and automated deletion minimizes legal risks while saving storage costs. Correlation IDs also make it possible to trace requests end to end in complex, distributed systems. Concrete measures can be derived from this foundation.

Next Steps

- Review your current logging configuration: Find out if sensitive data is being logged unprotected and implement masking routines if necessary.

- Automate monitoring and alerting: Tools like the ELK Stack or Datadog help quickly identify anomalies such as unusual traffic, authentication errors, or suspicious API calls.

- Train your team: Raise awareness among employees about the importance of secure logging to avoid mistakes like accidentally logging sensitive data.

- Conduct regular audits: Document your log processes to meet compliance requirements and ensure a stable security infrastructure in the long term.

Investing in a secure logging strategy pays off—through fewer security incidents, lower legal risks, and greater operational stability.

FAQs

How can you ensure that no sensitive data is stored in the logs of your API gateway?

To ensure that sensitive data does not end up in the logs of your API gateway, you should mask or completely remove critical information such as passwords, credit card data, or personal data before storage.

Additionally, it is advisable to regularly review the logs to ensure that no confidential data has been accidentally logged. Also, be sure to carefully manage access rights so that only authorized personnel have access to the logs. This helps significantly reduce the risk of unauthorized access.

How does real-time monitoring of API gateway logs help detect security incidents early?

Real-time monitoring of API gateway logs lets you recognize suspicious activities immediately and act on them. This way, you can detect security incidents early and quickly take the necessary steps.

By continuously analyzing the logs, unusual patterns such as unauthorized access attempts or abrupt spikes in requests can be directly identified. This significantly reduces the risk of security gaps.

What legal requirements apply to API gateway logging, and how can they be met?

API gateway logging must comply with applicable data protection laws, such as the GDPR. It is crucial to collect personal data only when absolutely necessary. Additionally, appropriate protective measures must be taken to ensure the security of this data.

To comply with legal requirements, you should consider the following points:

- Data minimization: Log only the information that is truly necessary.

- Access controls: Ensure that only authorized personnel have access to the logs.

- Encryption: Use encryption both during transmission and when storing the logs.

Furthermore, it is advisable to regularly review the logs and securely delete old or no longer needed data after a defined period. This ensures that legal requirements are consistently met.